College student put on academic probation for using Grammarly: ‘AI violation’

nypost.com

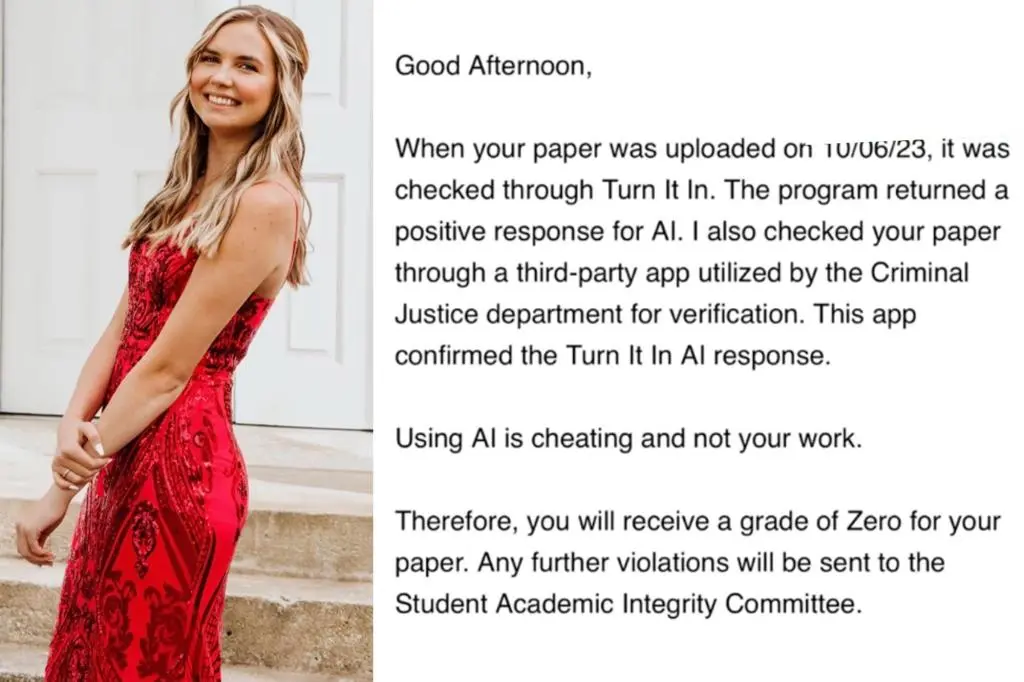

Marley Stevens, a junior at the University of North Georgia, says she was wrongly accused of cheating.