What about throughput, latency, schema modeling, query load balancing/routing, confidentiality, regulatory compliance, and operational tooling? How easily can I write a CRUD or line-of-business service using it?

- Posts: 33
- Comments: 208
- Joined: 3 yr. ago

Programming @programming.dev SBOM - Sandwich Bill of Materials

homeassistant @lemmy.world How's your HA voice assistant going?

Programming @programming.dev Worst of Breed software

Programming @programming.dev Logging Sucks - A review of challenges with logging

Linux @programming.dev Low FPS in Firefox on one monitor

Programming @programming.dev Your data model is your destiny

homeassistant @lemmy.world Nabu Casa - Ending production of Home Assistant Yellow

Programming @programming.dev Docker/OCI Image Registry Explorer - Explore OCI images using a web page

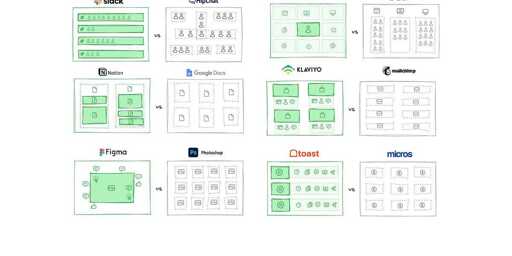

Programming @programming.dev You Don't Need Animations - Purposeful Animations

homeassistant @lemmy.world What's your most pointless or silliest automation?

homeassistant @lemmy.world Companion app for Android: It’s been a while

homeassistant @lemmy.world Zooz joins Works with Home Assistant

Linux @programming.dev Comparison of video game performance of Windows 11 vs SteamOS/Linux on the Lenovo Legion Go S

Technology @lemmy.world Deep Dive on Google's TPU (Tensor Processing Unit)

homeassistant @lemmy.world Release 2025.6: Getting picky about Bluetooth

Programming @programming.dev How GitLab decreased repo backup times from 48 hours to 41 minutes with a fix to Git

homeassistant @lemmy.world Home Assistant - Deprecating Core and Supervised installation methods, and 32-bit systems

Programming @programming.dev GitHub is introducing rate limits for unauthenticated pulls, API calls, and web access

Programming @programming.dev Parsing CSV at 21 GB/s Using SIMD on AMD 9950X in .NET

Games @lemmy.world Cozy video games can quell stress and anxiety

I wish I had some water meters that I could monitor to take advantage of the Energy dashboard, but sadly I don't have a submeter I can access.

Home Assistant just keeps methodically getting better!