Your first two paragraphs seem to rail against a philosophical conclusion made by the authors by virtue of carrying out the Turing test. Something like "this is evidence of machine consciousness" for example. I don't really get the impression that any such claim was made, or that more education in epistemology would have changed anything.

In a world where GPT-4 exists, the question of whether one person can be fooled by one chatbot in one conversation has long since stopped being interesting. The question of whether specific models can achieve statistically significant success is maybe a bit more compelling, not because it's some kind of breakthrough but because it makes a generalized claim.

Re: your edit, Turing explicitly puts forth the imitation game scenario as a practicable proxy for the question of machine intelligence, "can machines think?". He directly argues that this scenario is indeed a reasonable proxy for that question. His argument, as he admits, is not a strongly held conviction or a rigorous proof, but "recitations tending to produce belief," insofar as they are hard to rebut, or their rebuttals tend to be flawed. The whole point of the paper was to poke at the apparent differences between (a futuristic) machine intelligence and human intelligence. In this way, the Turing test is indeed a measure of intelligence. That is not to say that a machine passing the test is somehow in possession of a human-like mind or has reached a significant milestone of intelligence.

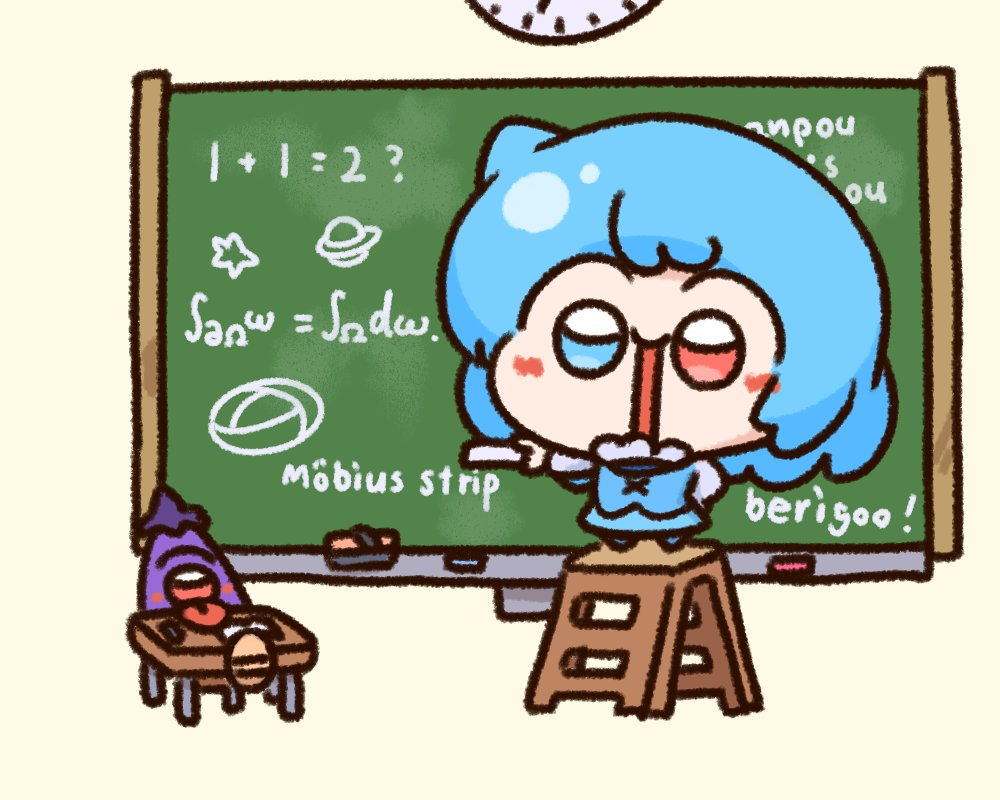

Stokes' theorem. Almost the same thing as the high school one. It generalizes the fundamental theorem of calculus to arbitrary oriented smooth manifolds with boundary. In the case that M is the interval [a, x] and ω is the 0-form f (i.e. just a smooth function) on M, one has dω = f'(t)dt and ∂M is the oriented set of points {+x, -a}. Integrating a 0-form over a finite set of oriented points is the same as evaluating at each point and summing, with negatively oriented points getting a negative sign. Then Stokes' theorem as written says that f(x) - f(a) = integral from a to x of f'(t) dt.
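
Spelled out, in case the symbols help: this is just the interval case written as a chain of equalities. It assumes the theorem is stated in the usual form, with the integral of ω over ∂M equal to the integral of dω over M, and it takes ω to be the function f viewed as a 0-form, so that dω is the 1-form f'(t) dt.

```latex
\[
\int_{\partial M} \omega = \int_{M} d\omega,
\qquad M = [a, x], \quad \omega = f, \quad d\omega = f'(t)\,dt, \quad \partial M = \{+x,\, -a\}
\]
\[
\int_{\partial M} \omega = (+1)\,f(x) + (-1)\,f(a) = f(x) - f(a),
\qquad
\int_{M} d\omega = \int_a^x f'(t)\,dt
\]
\[
\Longrightarrow \quad f(x) - f(a) = \int_a^x f'(t)\,dt
\]
```

The only place orientation shows up is the sign on f(a): the left endpoint of the interval is negatively oriented, which is exactly where the minus sign in the fundamental theorem of calculus comes from.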