The article says they suspect this was done by people who have an interest in hunting, since those people often complain that the eagles target birds like pheasants.

- Posts

- 30

- Comments

- 704

- Joined

- 2 yr. ago

Today I Learned @lemmy.world TIL if you live in Pennsylvania and make minimum wage you'd have to work 105 hours a week to afford a "modest" one bedroom rental.

Memes @lemmy.ml Scalable

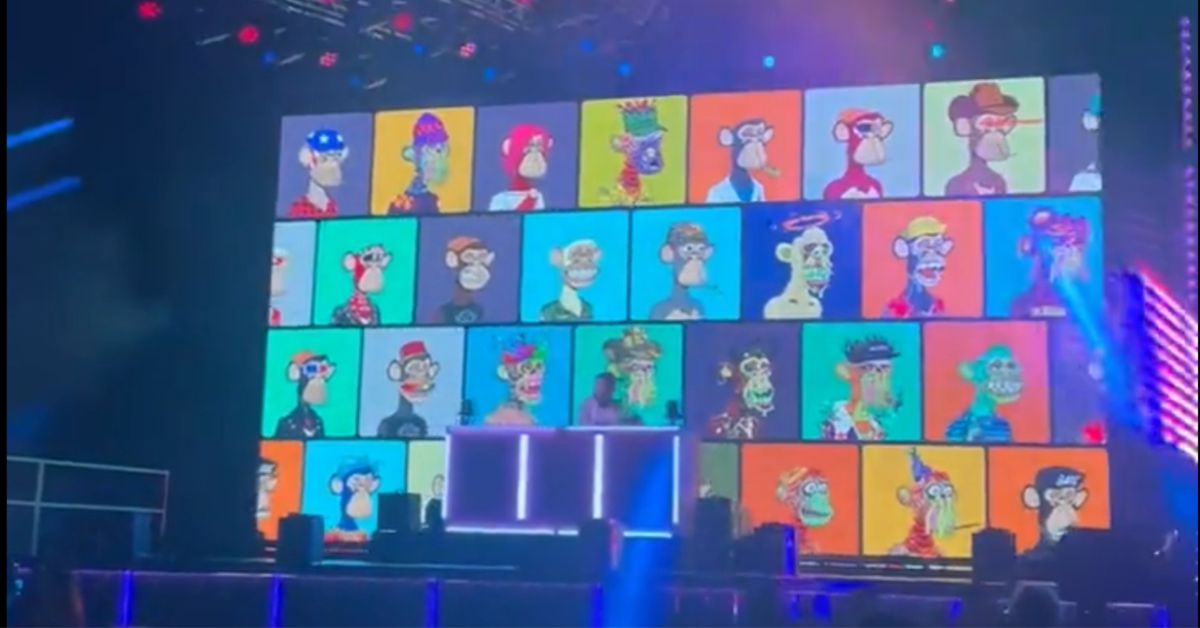

Technology @lemmy.world Attendees at Bored Ape NFT event report vision loss and extreme pain

politics @lemmy.world Universal Basic Income: In July three Pennsylvania lawmakers said they were going to introduce a bill calling for study of UBI. Has anyone seen updates since then?

politics @lemmy.world House speaker elections can be better with ranked choice voting

Political Memes @lemmy.world "All the land was claimed by others before we were born"

Ask Lemmy @lemmy.world How many patients can one doctor take care of per year? How many people can one farmer feed a year?

Memes @lemmy.ml School lunch debt, what about normal lunch debt?

Showerthoughts @lemmy.world Was thinking about how sometimes a therapist can give bad advice, and if you're not thinking about the situation clearly, how would you know? Clearly...

Memes @lemmy.ml Why won't they stay where they belong?

I think this is a misread of the article. They don't seem to be suggesting any actual solution, and only mention "populist rule" in passing with no specifics.

But they do seem to be blaming the left for not doing anything about the problem. And I thought it was funny how, at the top, they were like "even liberals like Roberts."